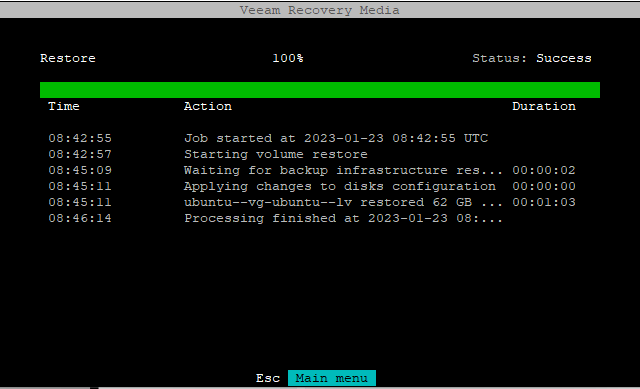

If you followed the previous part, you should now have restored all data, all partitions, the LUKS container, the LVM, the bootloader, etc.

If you reboot now, the system will not boot successfully. The boot process is still looking for the LUKS container by the UUID it had before the restore, which is recorded in /etc/crypttab and in the initramfs that GRUB loads. But you have created a new LUKS container, and it now has a new UUID.

If you have not already done so, save the device name of your operating system disk in the variable OSdisk, which the commands below use. To find out the device name of the operating system disk, see part 1. In my case, the operating system disk is /dev/sda.
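A minimal sketch of that step, assuming your disk is /dev/sda as in my case; adjust the value to your own disk. Note that the `${OSdisk}3` pattern used throughout this guide only works for disks named like /dev/sda. NVMe disks name their partitions /dev/nvme0n1p1, /dev/nvme0n1p2, and so on, so the commands would need adjusting there.

```shell
# Operating system disk (example value from this guide; change it for your system).
OSdisk=/dev/sda

# The partitions are addressed by appending the partition number to the disk name.
echo "ESP:   ${OSdisk}1"
echo "/boot: ${OSdisk}2"
echo "LUKS:  ${OSdisk}3"
```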

If you wrote down the original UUID, as I suggested in the previous parts, you can skip the section on finding it out and proceed straight to setting the original LUKS container UUID. If you did not write it down, continue here.

Verifying partition alignment and sector/cluster/block sizes

You should now verify that everything has been restored exactly the way it was before. Confirm that all sector/cluster/block sizes match what they were before the restore. Wrongly chosen sector/cluster/block sizes or misaligned partitions can negatively impact performance. If you did not follow my guide and restored everything from within the recovery UI, they will most likely not match. See the next part for more information on this.

Checking partition alignment

First, you can check if all three partitions are aligned properly.

veeamuser@veeam-recovery-iso:~$ sudo parted $OSdisk align-check optimal 1

1 aligned

veeamuser@veeam-recovery-iso:~$ sudo parted $OSdisk align-check optimal 2

2 aligned

veeamuser@veeam-recovery-iso:~$ sudo parted $OSdisk align-check optimal 3

3 aligned

veeamuser@veeam-recovery-iso:~$
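Instead of typing the command three times, you can loop over the partition numbers. This is a small sketch; `check_alignment` is a hypothetical helper name, and it assumes the three-partition layout used in this guide.

```shell
# Run parted's optimal alignment check on partitions 1 to 3 of the given disk.
check_alignment() {
  local disk=$1 part
  for part in 1 2 3; do
    sudo parted "$disk" align-check optimal "$part"
  done
}
```

Usage: `check_alignment "$OSdisk"` should print "1 aligned", "2 aligned" and "3 aligned" as above.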

Checking sector/cluster/block sizes

The goal here is to verify that everything has been restored exactly the way it was before the restore. If you wrote it down or saved it as shown in part 4, this should be easy to confirm. If you didn't, I will give some pointers; but if you want to be 100% sure, you can always install Ubuntu on the system, write down the setup (sector/block sizes, etc.) that the Ubuntu installer creates, then wipe the disk and proceed with the restore.

If you don't know what the sector/cluster/block sizes were before the restore, consider this:

- During my testing, Veeam always restored the block size of the /boot and /boot/efi partitions exactly as it was before the restore. You can assume that the block size of the /boot and /boot/efi partitions is also the block size that all the other partitions and volumes should have.

- It should always be the same for all partitions, volumes and file systems. In practice, this should be 512 throughout or 4096 throughout, because the Ubuntu installer chooses either 512 or 4096.

- Though I have not tested all configurations, it should be like this:

  - Disk is 512n (logical/physical sectors 512/512): Block size should be 512 throughout.

  - Disk is 512e (logical/physical sectors 512/4096): Block size should be 4096 throughout.

  - Disk is 4Kn (logical/physical sectors 4096/4096): Block size should be 4096 throughout.

- In other words: It looks like the Ubuntu installer always sets the block size to the physical sector size reported by the disk.
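To see which case applies to your disk, you can query its logical and physical sector sizes with `blockdev --getss` and `blockdev --getpbsz`. The helper below only encodes the rule of thumb from the list above, which is based on my observations rather than a guarantee; `expected_block_size` is a hypothetical name.

```shell
# Map a disk's logical/physical sector sizes (in bytes) to the block size
# the Ubuntu installer is expected to use, per the rule of thumb above.
expected_block_size() {
  local logical=$1 physical=$2
  case "$logical/$physical" in
    512/512)   echo 512 ;;    # 512n
    512/4096)  echo 4096 ;;   # 512e
    4096/4096) echo 4096 ;;   # 4Kn
    *)         echo "unknown combination $logical/$physical" >&2; return 1 ;;
  esac
}
```

Usage: `expected_block_size "$(sudo blockdev --getss "$OSdisk")" "$(sudo blockdev --getpbsz "$OSdisk")"`.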

The steps will be the same as in Part 4 - Getting ready for bare-metal restore, except now you have a baseline to compare it to.

Check the LUKS container properties.

admin01@testlabubuntu01:~$ sudo cryptsetup luksDump ${OSdisk}3 | grep -E 'sector|UUID'

UUID: d8073181-5283-44b5-b4dc-6014b2e1a3c2

sector: 4096 [bytes]

admin01@testlabubuntu01:~$

Of interest here is the sector size of the LUKS container and the container/partition UUID, but you might want to take note of some other properties here as well.

admin01@testlabubuntu01:~$ sudo cryptsetup luksDump ${OSdisk}3 | grep Cipher

Cipher: aes-xts-plain64

Cipher key: 512 bits

admin01@testlabubuntu01:~$

Also note the partition layout. Of interest here are the logical and physical sector sizes.

admin01@testlabubuntu01:~$ sudo gdisk -l $OSdisk

GPT fdisk (gdisk) version 1.0.8

...

Sector size (logical/physical): 512/4096 bytes

...

First usable sector is 34, last usable sector is 266338270

Partitions will be aligned on 2048-sector boundaries

...

Number Start (sector) End (sector) Size Code Name

1 2048 2203647 1.0 GiB EF00

2 2203648 6397951 2.0 GiB 8300

3 6397952 266336255 123.9 GiB 8300 LUKS

admin01@testlabubuntu01:~$

Though rather unimportant (unless you intend to restore to a disk with different logical/physical sector sizes), you can check the cluster size of the FAT32-formatted EFI System Partition (ESP, here: /dev/sda1). I did not find a way to do this on the Veeam recovery media, but if you created your own recovery media based on Ubuntu live, as I have shown previously, you can install mtools.

sudo apt install mtools

Then use minfo and look for the sector size and the cluster size.

admin01@testlabubuntu01:~$ sudo minfo -i ${OSdisk}1 | grep -E 'sector size|cluster size'

Hidden (2048) does not match sectors (63)

sector size: 512 bytes

cluster size: 8 sectors

admin01@testlabubuntu01:~$

The cluster size in bytes is the sector size multiplied by the cluster size in sectors; here: 512 * 8 = 4096. You can ignore the warning 'Hidden (2048) does not match sectors (63)'. It only reflects that the partition starts at sector 2048, i.e. that it is properly aligned.
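If you want to do this arithmetic straight from the command output, a small awk filter works. It is a sketch that assumes minfo prints the lines as `sector size: 512 bytes` and `cluster size: 8 sectors`, as in the output above; `cluster_bytes` is a hypothetical name.

```shell
# Read `minfo` output on stdin and print the FAT cluster size in bytes
# (bytes per sector multiplied by sectors per cluster).
cluster_bytes() {
  awk '$1 == "sector"  && $2 == "size:" { bytes_per_sector = $3 }
       $1 == "cluster" && $2 == "size:" { sectors_per_cluster = $3 }
       END { print bytes_per_sector * sectors_per_cluster }'
}
```

Usage: `sudo minfo -i "${OSdisk}1" | cluster_bytes` should print 4096 for the ESP shown above. Lines it does not recognize, such as the 'Hidden' warning, are ignored.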

Next is the /boot partition (ext4), here /dev/sda2. The block size of /boot is also unimportant, by the way, because it does not affect performance.

veeamuser@veeam-recovery-iso:~$ sudo tune2fs -l ${OSdisk}2 | grep "^Block size:"

Block size: 4096

veeamuser@veeam-recovery-iso:~$

As far as my testing goes, the sector/block sizes of the ESP and the /boot partition will always be the same after the restore as before, because Veeam restores them exactly as they were. To a fault, actually: when I tried to restore a backup from a 512e disk onto a 4Kn disk, Veeam did not restore the ESP properly.

Next, check the block size of the LVM mapper device. This should be the same as the LUKS container sector size.

veeamuser@veeam-recovery-iso:~$ sudo blockdev --getss /dev/mapper/ubuntu--vg-ubuntu--lv

4096

veeamuser@veeam-recovery-iso:~$

Or you can use tune2fs.

veeamuser@veeam-recovery-iso:~$ sudo tune2fs -l /dev/mapper/ubuntu--vg-ubuntu--lv | grep "^Block size:"

Block size: 4096

veeamuser@veeam-recovery-iso:~$

You can also check with stat.

admin01@testlabubuntu01:~$ sudo stat -f /

File: "/"

ID: f16df925830148c0 Namelen: 255 Type: ext2/ext3

Block size: 4096 Fundamental block size: 4096

Blocks: Total: 15909803 Free: 13416161 Available: 12599880

Inodes: Total: 4063232 Free: 3949218

admin01@testlabubuntu01:~$
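Because the logical sector size of the LVM device should equal the LUKS sector size, you can let the shell compare the two. This is a sketch: it assumes LUKS2 `luksDump` output containing a `sector: 4096 [bytes]` line, as shown earlier, and the logical volume path used in this guide; `luks_sector_size` and `compare_sector_sizes` are hypothetical helper names.

```shell
# Print the data-segment sector size from `cryptsetup luksDump` output on stdin.
luks_sector_size() {
  awk '$1 == "sector:" { print $2; exit }'
}

# Compare the LUKS sector size of partition 3 with the logical sector size
# of the logical volume. $1 is the OS disk, $2 optionally the LV path.
compare_sector_sizes() {
  local disk=$1 lv=${2:-/dev/mapper/ubuntu--vg-ubuntu--lv} luks lvss
  luks=$(sudo cryptsetup luksDump "${disk}3" | luks_sector_size)
  lvss=$(sudo blockdev --getss "$lv")
  if [ "$luks" = "$lvss" ]; then
    echo "OK: LUKS and LV sector sizes are both $luks"
  else
    echo "MISMATCH: LUKS sector size $luks, LV sector size $lvss"
    return 1
  fi
}
```

Usage: `compare_sector_sizes "$OSdisk"`.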

If you have saved this info in a file before, like I showed in part 4, you can now compare it directly to the restored system.

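Enter the RestoreInfo folder on the restored root file system (here: /mnt/home/admin01/RestoreInfo). This requires the root file system to be mounted at /mnt, as shown in Mounting the root file system below.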

cd /mnt/home/admin01/RestoreInfo

Save the current properties.

sudo cryptsetup luksDump ${OSdisk}3 > luksDump-restore

sudo gdisk -l $OSdisk > gdisk-restore

sudo minfo -i ${OSdisk}1 > minfo-part-1-restore

sudo tune2fs -l ${OSdisk}2 > tune2fs-part-2-restore

sudo blockdev --getss /dev/mapper/ubuntu--vg-ubuntu--lv > blockdevubuntu--vg-ubuntu--lv-restore

sudo tune2fs -l /dev/mapper/ubuntu--vg-ubuntu--lv > tune2fs-ubuntu--vg-ubuntu--lv-restore

sudo stat -f /mnt > stat-root-file-system-restore

Then compare each file with its baseline from part 4. For luksDump, the only things that should differ are the UUID and a few key-derivation properties (PBKDF, salts, iterations, digest).

For example:

diff luksDump luksDump-restore

diff gdisk -w gdisk-restore

veeamuser@veeam-recovery-iso:/mnt/home/admin01/RestoreInfo$ diff luksDump luksDump-restore

6c6

< UUID: d8073181-5283-44b5-b4dc-6014b2e1a3c2

---

> UUID: f802c718-8ba1-4487-94af-13c382cf6372

24c24

< PBKDF: argon2id

---

> PBKDF: argon2i

28,29c28,29

< Salt: 93 0e 2b 73 e2 58 d2 89 68 61 09 ec 4b 76 a4 c9

< 55 18 49 c7 85 4d d9 2c 5a 18 3f 49 5d 16 31 9d

---

> Salt: a9 70 0a 3f 65 b9 39 82 f1 64 f0 66 f2 66 f5 98

> a9 2b 9c a0 09 04 a9 49 57 6c 8f f0 0d 8e 25 7a

39,43c39,43

< Iterations: 129902

< Salt: b1 b2 5b 55 0e 16 eb d3 33 57 62 f7 a8 45 97 96

< 6d e1 3b c0 cb e1 d7 6f 9f f8 7b 82 c7 8e 90 ea

< Digest: de 73 b8 89 70 17 65 f5 b0 5c f0 21 14 e0 cb 21

< e8 25 74 5b 8f a1 14 dc bf 54 89 a2 b0 53 fd 2f

---

> Iterations: 141852

> Salt: 4a e5 4e aa e5 f7 7b d3 c8 88 6f 08 6e 45 dc b1

> d3 2f c5 7a 00 63 8e d4 4c e1 87 c9 2c d3 ea 70

> Digest: c4 fe 0f a0 53 34 8f eb 67 b8 a4 50 54 76 17 13

> a9 7a fd 64 34 be ef 88 f0 a3 cd df 59 fe d8 d2

veeamuser@veeam-recovery-iso:/mnt/home/admin01/RestoreInfo$

veeamuser@veeam-recovery-iso:/mnt/home/admin01/RestoreInfo$ diff gdisk -w gdisk-restore

1c1

< GPT fdisk (gdisk) version 1.0.8

---

> GPT fdisk (gdisk) version 1.0.3

23c23

< 3 6397952 266336255 123.9 GiB 8300

---

> 3 6397952 266336255 123.9 GiB 8300 LUKS

veeamuser@veeam-recovery-iso:/mnt/home/admin01/RestoreInfo$

You can do this for every file.
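To compare all files in one go, you can loop over the files ending in -restore. A sketch, assuming the baseline files from part 4 have the same names without the -restore suffix; `compare_restore_info` is a hypothetical helper name.

```shell
# Diff every "<name>-restore" file in a directory against its baseline "<name>".
# Assumes the baseline files from part 4 use the same names without "-restore".
compare_restore_info() {
  local dir=${1:-.} restored original
  for restored in "$dir"/*-restore; do
    [ -e "$restored" ] || continue            # no *-restore files at all
    original=${restored%-restore}
    echo "== $(basename "$original")"
    if [ ! -e "$original" ]; then
      echo "(no baseline file found)"
      continue
    fi
    # -w ignores whitespace differences, as in the gdisk example above.
    diff -w "$original" "$restored" && echo "(identical)"
  done
  return 0
}
```

Usage: run `compare_restore_info` inside the RestoreInfo folder, or pass the folder as the first argument.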

Finding out the original LUKS container UUID

If you exited the recovery UI after restoring the LVM, as I showed in the previous part, the LUKS container and the LVM will still be open. In that case, you can proceed straight to mounting the root file system. If you rebooted for some reason, you now need to open the LUKS container.

You can check if the LUKS container is opened by looking for a mapper device.

veeamuser@veeam-recovery-iso:~$ ls /dev/mapper

control ubuntu--vg-ubuntu--lv

'luks-a6ef0f16-f6ef-46d8-ace7-071cbc3cec58\x0a'

veeamuser@veeam-recovery-iso:~$

In this case, you can see that both the LUKS container and the LVM are opened. If they are not opened, open them now.

veeamuser@veeam-recovery-iso:~$ sudo cryptsetup luksOpen ${OSdisk}3 dm_crypt-0

Enter passphrase for /dev/sda3:

veeamuser@veeam-recovery-iso:~$

The same goes for the LVM: if you can't see it as /dev/mapper/ubuntu--vg-ubuntu--lv, open (activate) it. If the LUKS container has been opened successfully, the volume group should be found.

veeamuser@veeam-recovery-iso:~$ sudo vgscan

Found volume group "ubuntu-vg" using metadata type lvm2

veeamuser@veeam-recovery-iso:~$

Open it.

veeamuser@veeam-recovery-iso:~$ sudo vgchange -ay ubuntu-vg

1 logical volume(s) in volume group "ubuntu-vg" now active

veeamuser@veeam-recovery-iso:~$
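The checks and commands above can be combined into one helper that only opens what is not open yet. A sketch, assuming the names used in this guide (partition 3, volume group ubuntu-vg, mapper name dm_crypt-0); `ensure_root_lv_active` is a hypothetical name, and using lsblk to detect an already opened container is my assumption rather than a step from this guide.

```shell
# Open the LUKS container and activate the volume group, skipping steps
# that are already done. $1 is the operating system disk, e.g. /dev/sda.
ensure_root_lv_active() {
  local disk=$1 lv=/dev/mapper/ubuntu--vg-ubuntu--lv
  if [ -e "$lv" ]; then
    echo "$lv is already active"
    return 0
  fi
  # lsblk lists partition 3 and its children; a "crypt" entry means the
  # LUKS container is already open (for example by the recovery UI).
  if ! lsblk -n -o TYPE "${disk}3" | grep -qw crypt; then
    sudo cryptsetup luksOpen "${disk}3" dm_crypt-0 || return 1
  fi
  sudo vgscan
  sudo vgchange -ay ubuntu-vg
}
```

Usage: `ensure_root_lv_active "$OSdisk"`.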

You should now be able to mount the root file system.

Mounting the root file system

sudo mount /dev/mapper/ubuntu--vg-ubuntu--lv /mnt

Now look at /mnt/etc/crypttab to find out what the UUID was before the restore.

veeamuser@veeam-recovery-iso:~$ cat /mnt/etc/crypttab

dm_crypt-0 UUID=d8073181-5283-44b5-b4dc-6014b2e1a3c2 none luks

veeamuser@veeam-recovery-iso:~$
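If you prefer not to copy the UUID by hand, you can extract it from crypttab and keep it in a shell variable, which also survives unmounting /mnt in the next step. A sketch, assuming a single crypttab entry whose second field is `UUID=...` as above; `crypttab_uuid` and `origUUID` are hypothetical names.

```shell
# Print the UUID of the first crypttab entry whose source (field 2) is
# given as UUID=..., skipping comment lines. Defaults to the restored crypttab.
crypttab_uuid() {
  awk '$1 !~ /^#/ && $2 ~ /^UUID=/ { sub(/^UUID=/, "", $2); print $2; exit }' \
    "${1:-/mnt/etc/crypttab}"
}
```

Usage: run `origUUID=$(crypttab_uuid /mnt/etc/crypttab)` before unmounting, and later `sudo cryptsetup luksUUID --uuid "$origUUID" ${OSdisk}3` instead of pasting the UUID.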

Setting the original LUKS container UUID

With that done, unmount the root file system.

sudo umount /dev/mapper/ubuntu--vg-ubuntu--lv

Now, close the LVM.

veeamuser@veeam-recovery-iso:~$ sudo vgchange -an ubuntu-vg

0 logical volume(s) in volume group "ubuntu-vg" now active

veeamuser@veeam-recovery-iso:~$

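Close the LUKS container. If you opened it through the recovery UI, it will be named something like the following (check the actual name in /dev/mapper/). Put the path in single quotes, because the name contains a literal \x0a sequence that the shell would otherwise mangle.

sudo cryptsetup luksClose '/dev/mapper/luks-a6ef0f16-f6ef-46d8-ace7-071cbc3cec58\x0a'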

But in my case, it is called dm_crypt-0.

sudo cryptsetup luksClose /dev/mapper/dm_crypt-0

Get the current LUKS container UUID.

veeamuser@veeam-recovery-iso:~$ sudo cryptsetup luksUUID ${OSdisk}3

a6ef0f16-f6ef-46d8-ace7-071cbc3cec58

veeamuser@veeam-recovery-iso:~$

Compare this to the UUID in /mnt/etc/crypttab: d8073181-5283-44b5-b4dc-6014b2e1a3c2. Your UUIDs will differ from mine, but the point is the same: they do not match. This is why, if you rebooted now, the LUKS container would not be found and opened during boot, and consequently the root file system would not be found either.

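Now set the UUID of the LUKS container to what it was before the restore. Do not attempt to do it the other way around, by updating /mnt/etc/crypttab with the new UUID. If you did, you would also have to regenerate the initramfs of the restored system (and possibly update its GRUB configuration), because the crypttab entry is copied into the initramfs.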

veeamuser@veeam-recovery-iso:~$ sudo cryptsetup luksUUID --uuid d8073181-5283-44b5-b4dc-6014b2e1a3c2 ${OSdisk}3

WARNING!

========

Do you really want to change UUID of device?

Are you sure? (Type uppercase yes): YES

veeamuser@veeam-recovery-iso:~$

Optionally, you can now confirm that the UUID has been updated and matches the UUID in /mnt/etc/crypttab.

veeamuser@veeam-recovery-iso:~$ sudo cryptsetup luksUUID ${OSdisk}3

d8073181-5283-44b5-b4dc-6014b2e1a3c2

veeamuser@veeam-recovery-iso:~$

Remove the recovery media and reboot.

sudo reboot

If everything went well, you will be asked for the LUKS passphrase during boot, followed by the login prompt.

This is it. For troubleshooting tips, an explanation of why you should not use the recovery UI to re-create the LUKS container and the LVM, and a few other things, see the next parts.