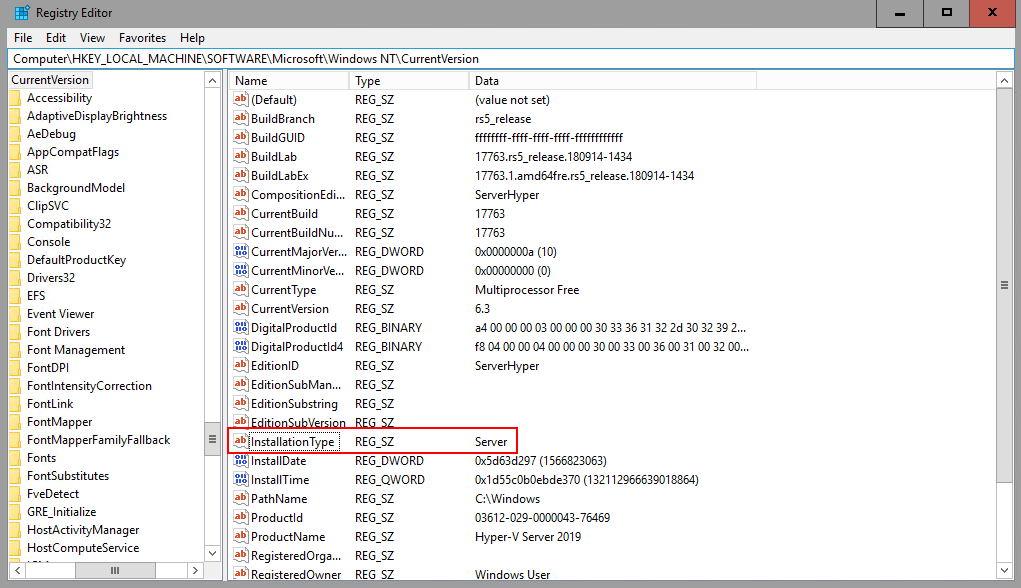

In this blog post, I will show how to install QNAP SnapAgent (QNAP's hardware VSS provider driver). The problem is that its installer refuses to install on Hyper-V Server (the free product).

Tuesday 31 January 2023

Installing QNAP SnapAgent on Hyper-V Server 2019

Saturday 28 January 2023

7 - Ubuntu with LUKS: Backup and Restore with Veeam Part 7 - Bare-metal restore: fixing LUKS container UUID

If you followed the previous part, you should now have restored all data, all partitions, the LUKS container, the LVM, the bootloader, etc.

If you reboot now, the system will not boot successfully, because the boot process (GRUB and the initramfs it loads) is looking for the LUKS container by the UUID it had before the restore. But you have created a new LUKS container, and it now has a new UUID.

If you wrote down the original UUID, as I suggested in the previous parts, you can skip the next step and go straight to changing the UUID. If you did not write down the UUID, continue here.

Verifying partition alignment and sector/cluster/block sizes

Checking partition alignment

Checking sector/cluster/block sizes

- During my testing, Veeam always restored the /boot and /boot/efi partitions with exactly the block size they had before. You can assume that the block size of the /boot and /boot/efi partitions is also the block size that all the other partitions and volumes should have.

- It should always be the same for all partitions, volumes and file systems. In practice, this should be 512 throughout or 4096 throughout, because the Ubuntu installer chooses either 512 or 4096.

- Though I have not tested all configurations, it should be like this:

- Disk is 512n (logical/physical sectors 512/512): Block size should be 512 throughout.

- Disk is 512e (logical/physical sectors 512/4096): Block size should be 4096 throughout.

- Disk is 4Kn (logical/physical sectors 4096/4096): Block size should be 4096 throughout.

- In other words: it looks like the Ubuntu installer always sets the block size to the physical sector size reported by the disk.
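The rules above can be turned into a small lookup. This is a minimal sketch, assuming the observation above holds (expected block size = physical sector size); the function names `classify_disk` and `disk_sector_sizes` are hypothetical helpers, while `blockdev --getss` (logical) and `blockdev --getpbsz` (physical) are the real util-linux options.

```shell
#!/usr/bin/env bash
# Sketch: classify a disk by its logical/physical sector sizes and print the
# block size this post expects throughout (assumed: the physical sector size).

# classify_disk LOGICAL PHYSICAL -> prints "<disk type> <expected block size>"
classify_disk() {
  local logical=$1 physical=$2
  case "$logical/$physical" in
    512/512)   echo "512n 512" ;;
    512/4096)  echo "512e 4096" ;;
    4096/4096) echo "4Kn 4096" ;;
    *)         echo "unknown $physical"; return 1 ;;
  esac
}

# disk_sector_sizes DEVICE -> prints "LOGICAL PHYSICAL" as reported by blockdev
disk_sector_sizes() {
  local dev=$1
  echo "$(sudo blockdev --getss "$dev") $(sudo blockdev --getpbsz "$dev")"
}
```

Usage on the system from this post would be `classify_disk $(disk_sector_sizes "$OSdisk")`, which for the 512e disk shown by gdisk below should print `512e 4096`.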

```
admin01@testlabubuntu01:~$ sudo cryptsetup luksDump ${OSdisk}3 | grep Cipher
Cipher: aes-xts-plain64
Cipher key: 512 bits
admin01@testlabubuntu01:~$
```

```
admin01@testlabubuntu01:~$ sudo gdisk -l $OSdisk
GPT fdisk (gdisk) version 1.0.8
...
Sector size (logical/physical): 512/4096 bytes
...
First usable sector is 34, last usable sector is 266338270
Partitions will be aligned on 2048-sector boundaries
...
Number  Start (sector)    End (sector)  Size        Code  Name
   1            2048         2203647    1.0 GiB     EF00
   2         2203648         6397951    2.0 GiB     8300
   3         6397952       266336255    123.9 GiB   8300  LUKS
admin01@testlabubuntu01:~$
```
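To check the alignment from the gdisk output above, you can test whether each start sector is a multiple of 2048. With 512-byte logical sectors that is a 1 MiB boundary, and because 2048 is a multiple of 8 it also lines up with 4096-byte physical sectors. A minimal sketch; `check_alignment` is a hypothetical helper that only parses text, assuming gdisk's table rows start with number, start sector and end sector.

```shell
#!/usr/bin/env bash
# Sketch: read "gdisk -l" output on stdin and report, for every partition row,
# whether its start sector is a multiple of ALIGN sectors (default 2048).

check_alignment() {
  local align=${1:-2048}
  awk -v align="$align" '
    # partition rows begin with: partition number, start sector, end sector
    $1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && $3 ~ /^[0-9]+$/ {
      status = ($2 % align == 0) ? "aligned" : "NOT aligned"
      printf "partition %s starts at %s: %s\n", $1, $2, status
    }'
}
```

Usage: `sudo gdisk -l "$OSdisk" | check_alignment`. For the table above, all three partitions (starting at 2048, 2203648 and 6397952) come out as aligned.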

```
admin01@testlabubuntu01:~$ sudo minfo -i ${OSdisk}1 | grep -E 'sector size|cluster size'
Hidden (2048) does not match sectors (63)
sector size: 512 bytes
cluster size: 8 sectors
admin01@testlabubuntu01:~$
```

The cluster size in bytes is the sector size multiplied by the cluster size in sectors, here: 512 * 8 = 4096. You can ignore the warning 'Hidden (2048) does not match sectors (63)'. It just means that the partition starts at sector 2048 instead of on the legacy 63-sector track boundary, i.e. that it is properly aligned.
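The multiplication can be done directly on the minfo output. A minimal sketch, assuming minfo prints the `sector size:` and `cluster size:` lines in the format shown above; `fat_cluster_bytes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: read minfo output on stdin and print the FAT cluster size in bytes
# (sector size in bytes multiplied by the number of sectors per cluster).

fat_cluster_bytes() {
  awk '
    /^[ \t]*sector size:/  { sector = $3 }   # "sector size: 512 bytes"
    /^[ \t]*cluster size:/ { spc = $3 }      # "cluster size: 8 sectors"
    END { if (sector && spc) print sector * spc; else exit 1 }'
}
```

Usage: `sudo minfo -i ${OSdisk}1 2>/dev/null | fat_cluster_bytes` should print 4096 for the ESP above. The `2>/dev/null` hides the 'Hidden ... does not match sectors' warning, which goes to stderr (that is why it still showed up despite the grep above).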

Next is the /boot partition (EXT4), here /dev/sda2. By the way, the block size of /boot does not matter much either, because it does not affect performance.

```
veeamuser@veeam-recovery-iso:~$ sudo tune2fs -l ${OSdisk}2 | grep "^Block size:"
Block size: 4096
veeamuser@veeam-recovery-iso:~$
```

In my testing, the sector/block sizes of the ESP and the /boot partition were always the same after the restore as before, because Veeam restores these partitions exactly as they were. To a fault, actually: when I tried to restore a backup from a 512e disk onto a 4Kn disk, Veeam did not restore the ESP properly.

Next, check the block size of the LVM mapper device. This should be the same as the LUKS container sector size.

```
veeamuser@veeam-recovery-iso:~$ sudo blockdev --getss /dev/mapper/ubuntu--vg-ubuntu--lv
4096
```
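Since all these sizes should match, the three checks in this section (ESP cluster size via minfo, /boot block size via tune2fs, mapper sector size via blockdev) can be compared in one go. A minimal sketch that follows this post's assumption that the three values should be identical; `same_block_size` and `collect_block_sizes` are hypothetical helpers, and the device names are the ones used in this post.

```shell
#!/usr/bin/env bash
# Sketch: compare the block sizes this post expects to be identical.

# same_block_size SIZE... -> "OK: all N" or "MISMATCH: ..." (non-zero exit)
same_block_size() {
  [ $# -gt 0 ] || return 1
  local first=$1 size
  for size in "$@"; do
    if [ "$size" != "$first" ]; then
      echo "MISMATCH: $*"
      return 1
    fi
  done
  echo "OK: all $first"
}

# Prints "ESP_CLUSTER BOOT_BLOCK LV_SECTOR". Assumes OSdisk is set (e.g. /dev/sda),
# the ESP is partition 1, /boot is partition 2 and the LV name from this post.
collect_block_sizes() {
  local esp boot lv
  esp=$(sudo minfo -i "${OSdisk}1" 2>/dev/null |
        awk '/sector size:/ {s = $3} /cluster size:/ {c = $3} END {print s * c}')
  boot=$(sudo tune2fs -l "${OSdisk}2" | awk -F': *' '/^Block size:/ {print $2}')
  lv=$(sudo blockdev --getss /dev/mapper/ubuntu--vg-ubuntu--lv)
  echo "$esp $boot $lv"
}
```

Usage: `same_block_size $(collect_block_sizes)`. On the system in this post, all three values are 4096.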

Finding out the original LUKS container UUID

If you exited the recovery UI after restoring the LVM, as I showed in the previous part, the LUKS container and the LVM will still be open. In that case, you can proceed straight to mounting the root file system. If you rebooted for some reason, you now need to open the LUKS container again.

You can check if the LUKS container is opened by looking for a mapper device.

```
control  ubuntu--vg-ubuntu--lv
'luks-a6ef0f16-f6ef-46d8-ace7-071cbc3cec58\x0a'
veeamuser@veeam-recovery-iso:~$
```

In this case, you can see that both the LUKS container and the LVM are open. If they are not, open them now.

```
Enter passphrase for /dev/sda3:
veeamuser@veeam-recovery-iso:~$
```

The same goes for the LVM: if you can't see it as /dev/mapper/ubuntu--vg-ubuntu--lv, it isn't open yet. If the LUKS container has been opened successfully, scanning for volume groups should find it.

```
Found volume group "ubuntu-vg" using metadata type lvm2
veeamuser@veeam-recovery-iso:~$
```

Open it.

```
1 logical volume(s) in volume group "ubuntu-vg" now active
veeamuser@veeam-recovery-iso:~$
```
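The open-if-not-already-open logic of this section can be scripted. This is a minimal sketch, not the exact commands used in this post: it assumes `cryptsetup open` (LUKS is its default type), `vgscan` and `vgchange -ay` for the three steps, and `LUKS_NAME` is a hypothetical mapping name (set it to the name you see in /dev/mapper). With `DRY_RUN=1` the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: open the LUKS container and activate the LVM unless already open.
# OSdisk, LUKS_NAME, VG_NAME and LV_PATH are assumptions; adjust them.

OSdisk=${OSdisk:-/dev/sda}
LUKS_NAME=${LUKS_NAME:-luks-restored}          # hypothetical mapping name
VG_NAME=${VG_NAME:-ubuntu-vg}
LV_PATH=${LV_PATH:-/dev/mapper/ubuntu--vg-ubuntu--lv}

# run CMD...: execute CMD, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

open_luks_and_lvm() {
  if [ -e "$LV_PATH" ]; then
    echo "LVM already active: $LV_PATH"
    return 0
  fi
  if [ ! -e "/dev/mapper/$LUKS_NAME" ]; then
    # prompts for the passphrase ("Enter passphrase for /dev/sda3:")
    run sudo cryptsetup open "${OSdisk}3" "$LUKS_NAME" || return 1
  fi
  run sudo vgscan                      # should report volume group "ubuntu-vg"
  run sudo vgchange -ay "$VG_NAME"     # activates the logical volume(s)
}
```

Running `DRY_RUN=1 open_luks_and_lvm` first lets you review the commands before anything touches the disk.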

Mounting the root file system
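Once the root file system is mounted, the original UUID can be read from the restored system's /etc/crypttab, which refers to the LUKS container by `UUID=` on typical Ubuntu installs. A minimal sketch, assuming the root LV is mounted read-only at /mnt (an assumption; any mount point works); `crypttab_uuid` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the first UUID= source found in a crypttab read from stdin.
# Assumed mount step beforehand (read-only is enough for this):
#   sudo mount -o ro /dev/mapper/ubuntu--vg-ubuntu--lv /mnt

crypttab_uuid() {
  awk '!/^[ \t]*#/ {                       # skip comment lines
         for (i = 1; i <= NF; i++)
           if ($i ~ /^UUID=/) { sub(/^UUID=/, "", $i); print $i; exit }
       }'
}
```

Usage: `crypttab_uuid < /mnt/etc/crypttab`. The printed value is the UUID to set on the re-created container in the next step.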

Setting the original LUKS container UUID

```
veeamuser@veeam-recovery-iso:~$ sudo cryptsetup luksUUID --uuid d8073181-5283-44b5-b4dc-6014b2e1a3c2 ${OSdisk}3
WARNING!
========
Do you really want to change UUID of device?
Are you sure? (Type uppercase yes): YES
veeamuser@veeam-recovery-iso:~$
```
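A typo in a hand-copied UUID would leave the system unbootable, so it can help to check the format before running the command above and to confirm the result afterwards. A minimal sketch; `is_uuid` and `verify_luks_uuid` are hypothetical helpers, and `cryptsetup luksUUID DEVICE` without `--uuid` simply prints the current UUID.

```shell
#!/usr/bin/env bash
# Sketch: validate a UUID string and compare it with the LUKS header's UUID.

# is_uuid STRING: succeeds if STRING has the 8-4-4-4-12 hex digit layout
is_uuid() {
  [[ $1 =~ ^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$ ]]
}

# verify_luks_uuid EXPECTED DEVICE: compare EXPECTED with what cryptsetup reports
verify_luks_uuid() {
  local expected=$1 dev=$2 current
  is_uuid "$expected" || { echo "not a UUID: $expected"; return 1; }
  current=$(sudo cryptsetup luksUUID "$dev") || return 1
  if [ "${current,,}" = "${expected,,}" ]; then
    echo "OK: $dev has UUID $current"
  else
    echo "MISMATCH: $dev has $current, expected $expected"
    return 1
  fi
}
```

Usage: `verify_luks_uuid d8073181-5283-44b5-b4dc-6014b2e1a3c2 ${OSdisk}3`.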

6 - Ubuntu with LUKS: Backup and Restore with Veeam Part 6 - Bare-metal restore: LUKS, LVM, root file system

If you followed part 5, you should still be in the recovery media environment, and you should have restored the GPT partition table, the EFI system partition and the /boot partition.

The issue with Veeam and LUKS containers

/dev/sda

Re-creating LUKS partition
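The LUKS partition has to be re-created with the same start and end sectors, type code and name it had before, as recorded with `gdisk -l`. A minimal sketch that only builds and prints an `sgdisk` command so you can review it first; `recreate_partition_cmd` is a hypothetical helper, while `-n partnum:start:end`, `-t partnum:code` and `-c partnum:name` are real sgdisk options. The example values are taken from the gdisk listing of the test system used in this series.

```shell
#!/usr/bin/env bash
# Sketch: print the sgdisk command that re-creates a partition exactly as
# recorded from "gdisk -l". Nothing is executed; review, then run it yourself.

# recreate_partition_cmd DISK NUM START END TYPECODE NAME
recreate_partition_cmd() {
  local disk=$1 num=$2 start=$3 end=$4 code=$5 name=$6
  # %q quotes the name so that names with spaces survive copy and paste
  printf 'sudo sgdisk -n %s:%s:%s -t %s:%s -c %s:%q %s\n' \
    "$num" "$start" "$end" "$num" "$code" "$num" "$name" "$disk"
}
```

Usage: `recreate_partition_cmd /dev/sda 3 6397952 266336255 8300 LUKS` prints `sudo sgdisk -n 3:6397952:266336255 -t 3:8300 -c 3:LUKS /dev/sda`.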

Re-creating LUKS container

Notes on LUKS container sector size

- 512n (logical/physical: 512/512 bytes)

  In this case, it would be best to set the sector size to 512, and cryptsetup should do this by default if no --sector-size parameter is supplied. I did not test what the Ubuntu installer uses in this case.

- 512e (logical/physical: 512/4096 bytes)

  Here, the Ubuntu installer will use a sector/block size of 4096 for all partitions (ESP, here: /dev/sda1; /boot, here: /dev/sda2; LUKS, here: /dev/sda3). But cryptsetup will default to 512, which is why it is necessary to set --sector-size=4096 if you want to re-create the LUKS container exactly as it was before the restore.

- 4Kn (logical/physical: 4096/4096 bytes)

  I have not tested this, but presumably the Ubuntu installer will also default to 4096, and so will cryptsetup. You can also set --sector-size=4096 explicitly.
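These notes boil down to: use the disk's physical sector size as the LUKS2 sector size. A minimal sketch that prints a `cryptsetup luksFormat` command for review, passing `--sector-size` explicitly so the result does not depend on your cryptsetup version's default. The cipher and key size are assumptions copied from the luksDump of the original container in this series (aes-xts-plain64, 512 bits); `luks_format_cmd` is a hypothetical helper. Remember that luksFormat destroys whatever is on the partition.

```shell
#!/usr/bin/env bash
# Sketch: build a luksFormat command whose sector size follows the disk's
# physical sector size. Only prints the command; nothing is executed.

# luks_format_cmd PARTITION PHYSICAL_SECTOR_SIZE
luks_format_cmd() {
  local part=$1 physical=$2 sector
  case "$physical" in
    512)  sector=512 ;;     # 512n
    4096) sector=4096 ;;    # 512e and 4Kn
    *)    echo "unexpected physical sector size: $physical" >&2; return 1 ;;
  esac
  echo "sudo cryptsetup luksFormat --type luks2 --cipher aes-xts-plain64" \
       "--key-size 512 --sector-size $sector $part"
}
```

Usage: `luks_format_cmd "${OSdisk}3" "$(sudo blockdev --getpbsz "$OSdisk")"`; on the 512e disk from this series it selects `--sector-size 4096`.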

Re-creating LUKS container

Restoring LVM and root file system

Only if you get 'The device is too small'

Proceed with restoring LVM and root file system

Monday 23 January 2023

5 - Ubuntu with LUKS: Backup and Restore with Veeam Part 5 - Bare-metal restore: EFI and bootloader

In the previous parts, I showed how to use Veeam to back up an Ubuntu installation inside a LUKS container. I also showed how to prepare for a bare-metal restore. In this part, I will show how to restore the backup to bare metal.

The challenge here is that Veeam neither backs up nor restores LUKS containers. However, I previously showed how to back up the contents of the LUKS container, so you would be right to assume that the restore should work as long as you re-create the LUKS container manually. But first things first. You should now have the following things ready:

- backup
- hardware that the backup will be restored to
- recovery media
- knowledge of the
  - operating system disk's physical properties
  - partition layout
  - file system sector/block sizes
  - LUKS container properties

But I did not do the steps described in the previous parts while I could still access my Ubuntu computer, and now I cannot access the Ubuntu installation anymore! What can I do?

- Veeam Recovery media

  Go back to the previous part and create the recovery media. There you will find information on how to download the Veeam generic recovery media or how to create your own recovery media based on Ubuntu live.

- Information on disk sector size, partition table, LUKS container, etc.

  You will use some of the information collected previously, not in this part but in the next one. If you don't have it, look out for the additional information that I have added to the guide.

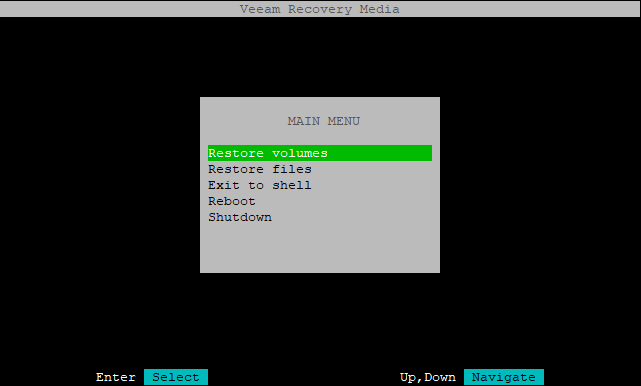

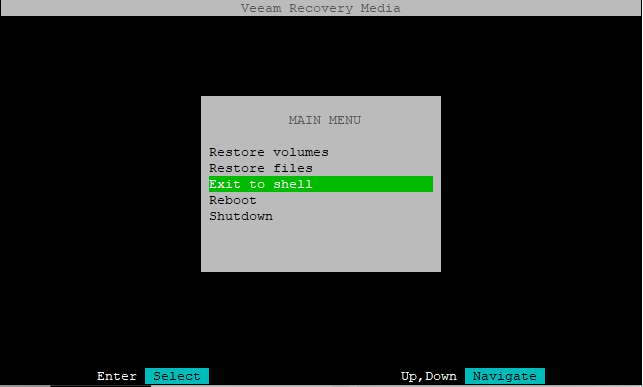

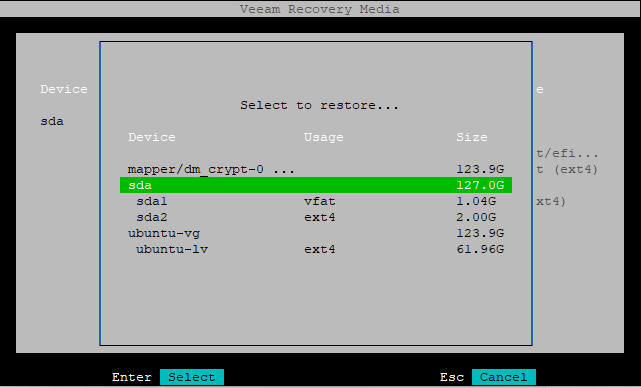

Booting into the recovery media

Preparing the disk

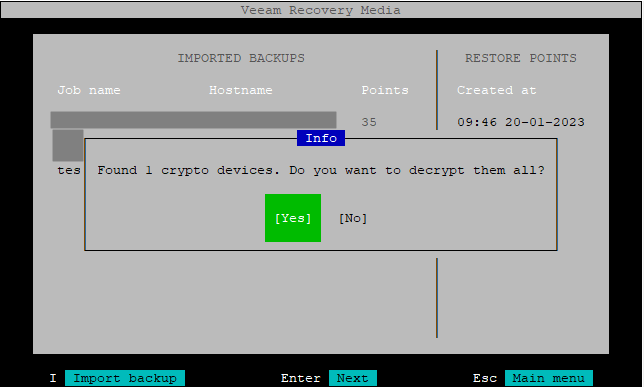

Accessing the backup

Locate the backup. In my case, I will connect to a VBR (Veeam Backup & Replication) server.

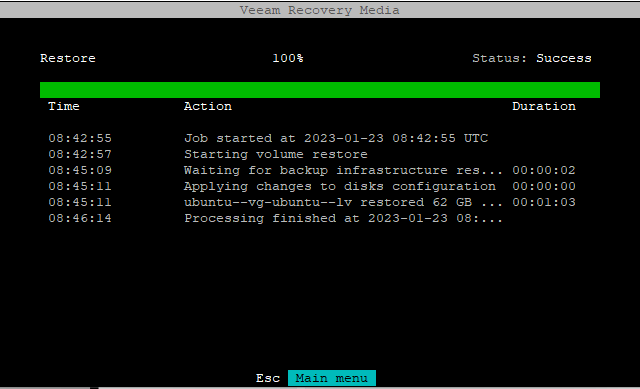

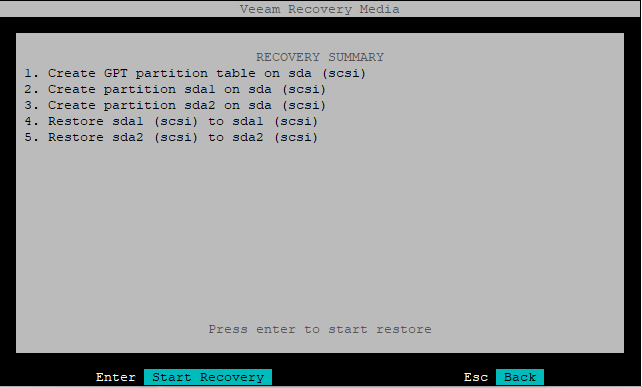

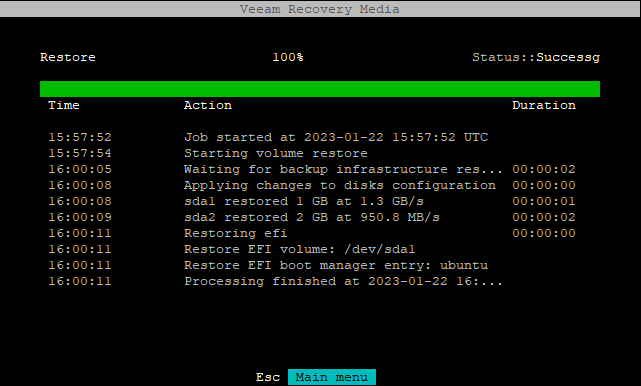

Restoring GPT partition table, EFI system partition (ESP) and /boot partition

```
Restore 100%  Status: Success

Time      Action                                       Duration
15:57:52  Job started at 2023-01-22 15:57:52 UTC
15:57:54  Starting volume restore
16:00:05  Waiting for backup infrastructure res...     00:00:02
16:00:08  Applying changes to disks configuration      00:00:00
16:00:08  sda1 restored 1 GB at 1.3 GB/s               00:00:01
16:00:09  sda2 restored 2 GB at 950.8 MB/s             00:00:02
16:00:11  Restoring efi                                00:00:00
16:00:11  Restore EFI volume: /dev/sda1
16:00:11  Restore EFI boot manager entry: ubuntu
16:00:11  Processing finished at 2023-01-22 16:...
```